SAMM and the Financial Services Industry

Posted by Eoin Keary in Discussion on August 24th, 2010

SAMM and the financial services industry:

I have conducted, sold and project managed SAMM engagements for financial services (FS) organisations throughout Europe over the past years. It is obvious that demand for such services is growing. Demand is rising for “security@source”, be it via code review, secure development or grey box penetration testing, and for a supporting framework to tie it all together. Understandably so, as SAMM is one of the first pragmatic benchmarking and assessment frameworks for the somewhat ancient “Security in the SDLC” challenge.

The financial services industry is the perfect work stream for frameworks like SAMM. Financial services is widely known as an area which invests heavily in information security, and it is heavily regulated (some say not heavily enough). A daily challenge for FS is to maintain leading edge security while managing costs and usability, and while remaining compliant with industry regulations, corporate governance and local/regional/global legislation.

SAMM covers four domains which in turn have sub domains. These four “pillars” attempt to examine all aspects of software development, including all external catalysts which may either make security more robust or introduce weakness.

The beauty of SAMM is its simplicity:

It would be naive to assume SAMM is a “silver bullet” in terms of SDLC assessment but it is a very pragmatic solution to a rather complex ecosystem.

The questionnaire is simple and effective, assuming the respondent has knowledge of secure application development; without it, the questions can sometimes be open to misinterpretation. One of the key challenges in developing SAMM was the authoring and delivery of the questionnaire, the risk being that the individuals questioned may misunderstand the questions.

The key to an accurate SAMM effort is [in audit speak] to procure a decent “sample space”. Sample space is a function of the number and the diversity [roles within the SDLC] of the individuals interviewed by the SAMM reviewer. Accuracy of the answers given is also important, as answers will diverge based on role within the organisation.
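The point about role-based divergence can be made concrete. The following is a minimal sketch, assuming a hypothetical data layout in which each interviewee is recorded with a role and yes/no answers keyed by a made-up question id; none of these names come from the SAMM questionnaire itself. It reports how many distinct roles were covered and which questions drew conflicting answers.

```python
from collections import defaultdict

# Hypothetical interview records: (role, {question_id: answered_yes}).
# Roles and question ids are illustrative, not taken from the SAMM questionnaire.
interviews = [
    ("developer", {"Q1": True, "Q2": False}),
    ("architect", {"Q1": True, "Q2": True}),
    ("qa", {"Q1": True, "Q2": False}),
]


def role_coverage(records):
    """Return the set of distinct roles interviewed."""
    return {role for role, _ in records}


def divergent_questions(records):
    """Return the question ids whose answers differ between interviewees."""
    answers = defaultdict(set)
    for _, responses in records:
        for question, value in responses.items():
            answers[question].add(value)
    return sorted(q for q, values in answers.items() if len(values) > 1)


print(len(role_coverage(interviews)))   # number of distinct roles covered
print(divergent_questions(interviews))  # questions worth revisiting
```

Questions flagged this way are candidates for a follow-up conversation rather than proof that anyone answered incorrectly.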

Roadmap definition is also a challenge, and knowing the focus points of the organisation being assessed is very important when developing the roadmap. [E.g. a financial services organisation may focus on regulatory and compliance issues, whereas a software development house may focus on them less.] A principal benefit of SAMM is the ability to define a high-level roadmap and then drill down into each activity to define what is required to reach the required SAMM level for a given domain.
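As a rough illustration of that drill-down, the sketch below compares current and target maturity levels and lists the steps still to be taken, largest gap first. It assumes levels scored from 0 to 3; the scores, the choice of practices and the function name `roadmap_gaps` are all hypothetical and are not part of any SAMM tooling.

```python
# Hypothetical current and target maturity levels (0-3) per area assessed.
# The scores are invented purely for illustration.
current = {"Education & Guidance": 1, "Code Review": 0, "Security Testing": 2}
target = {"Education & Guidance": 2, "Code Review": 2, "Security Testing": 2}


def roadmap_gaps(current_levels, target_levels):
    """Return (area, from_level, to_level) for each area below target, largest gap first."""
    steps = []
    for area, goal in target_levels.items():
        have = current_levels.get(area, 0)  # an unassessed area is treated as level 0
        if goal > have:
            steps.append((area, have, goal))
    return sorted(steps, key=lambda step: step[2] - step[1], reverse=True)


for area, have, goal in roadmap_gaps(current, target):
    print(f"{area}: level {have} -> {goal}")
```

Ordering by gap size is only one possible prioritisation; an organisation driven by regulation might instead weight the areas its regulator cares about most.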

Resources posted and SAMM in XML

Posted by Pravir Chandra in Discussion on August 21st, 2010

Over the course of the past year, many people have contributed resources related to SAMM (via the mailing list primarily) and we haven’t had them in an easy-to-find place. Well, that’s all changed now. The new Download page now has all the resources neatly organized for people to download, use, and extend. If you have created any other resources (or made improvements to any that we have posted), please ping the mailing list with the updates and we’ll link them from this page.

One of the other new items is a full XML version of the SAMM 1.0 framework document. It includes all the content from the whole SAMM document, so now it should be a lot simpler to build tools and automation around the model itself (not to mention making translations into other languages a lot simpler).

OpenSAMM 1.0 in Japanese

Posted by Pravir Chandra in Discussion, Releases on April 7th, 2010

Masaki Kubo at JPCERT undertook the great effort to translate the SAMM 1.0 document into Japanese. It’s available here. I’d like to thank him and JPCERT very much for the effort and the motivation to drive this to completion. Fantastic work!

It’s been a little while since I’ve posted anything to the site, but don’t mistake that for lack of activity! There’s actually a backlog of contributed resources that I’ve been meaning to post here but haven’t had the time to get it done yet. They’re all available via the mailing list with a little digging, but in the next week or two, we’ll try to get them all up here.

Jeremy Epstein on the Value of a Maturity Model

Posted by admin in Discussion on June 18th, 2009

Security maturity models are the newest thing, and also a very old idea with a new name. If you look back 25 years to the dreaded Orange Book (also known as the Trusted Computer System Evaluation Criteria or TCSEC), it included two types of requirements – functional (i.e., features) and assurance. The way Orange Book specified assurance is through techniques like design documentation, use of configuration management, formal modeling, trusted distribution, independent testing, etc. Each of the requirements stepped up as the system moved from the lowest levels of assurance (D) to the highest (A1). Or in other words, to get a more secure system, you need a more mature security development process.

As an example, independent testing was a key part of the requirement set – for class C products (C1 and C2) vendors were explicitly required to provide independent testing by “at least two individuals with bachelor degrees in Computer Science or the equivalent. Team members shall be able to follow test plans prepared by the system developer and suggest additions, shall be familiar with the ‘flaw hypothesis’ or equivalent security testing methodology, and shall have assembly level programming experience. Before testing begins, the team members shall have functional knowledge of, and shall have completed the system developer’s internals course for, the system being evaluated.” [TCSEC section 10.1.1] Further, “The team shall have ‘hands-on’ involvement in an independent run of the tests used by the system developer. The team shall independently design and implement at least five system-specific tests in an attempt to circumvent the security mechanisms of the system. The elapsed time devoted to testing shall be at least one month and need not exceed three months. There shall be no fewer than twenty hands-on hours spent carrying out system developer-defined tests and test team-defined tests.” [TCSEC section 10.1.2] The requirements increase as the level of assurance goes up; class A systems require testing by “at least one individual with a bachelor’s degree in Computer Science or the equivalent and at least two individuals with masters’ degrees in Computer Science or equivalent” [TCSEC section 10.3.1] and the effort invested “shall be at least three months and need not exceed six months. There shall be no fewer than fifty hands-on hours per team member spent carrying out system developer-defined tests and test team-defined tests.”

In the past 25 years since the TCSEC, there have been dozens of efforts to define maturity models to emphasize security. Most (probably all!) of them are based on wishful thinking: if only we’d invest more in various processes, we’d get more secure systems. Unfortunately, with very minor exceptions, the recommendations for how to build more secure software are based on “gut feel” and not any metrics.

In early 2008, I was working for a medium sized software vendor. To try to convince my management that they should invest in software security, I contacted friends and friends-of-friends in a dozen software companies, and asked them what techniques and processes their organizations use to improve the security of their products, and what motivated their organizations to make investments in security. The results of that survey showed that there’s tremendous variation from one organization to another, and that some of the lowest-tech solutions like developer training are believed to be most effective. I say “believed to be” because even now, no one has metrics to measure effectiveness. I didn’t call my results a maturity model, but that’s what I found – organizations with radically different maturity models, frequently driven by a single individual who “sees the light”. [A brief summary of the survey was published as “What Measures do Vendors Use for Software Assurance?” at the Making the Business Case for Software Assurance Workshop, Carnegie Mellon University Software Engineering Institute, September 2008. A more complete version is in preparation.]

So how do security maturity models like OpenSAMM and BSIMM fit into this picture? Both have done a great job cataloging, updating, and organizing many of the “rules of thumb” that have been used over the past few decades for investing in software assurance. By defining a common language to describe the techniques we use, these models will enable us to compare one organization to another, and will help organizations understand areas where they may be more or less advanced than their peers. However, they still won’t tell us which techniques are the most cost effective methods to gain assurance.

Which begs the question – which is the better model? My answer is simple: it doesn’t really matter. Both are good structures for comparing an organization to a benchmark. Neither has metrics to show which techniques are cost effective and which are just things that we hope will have a positive impact. We’re not yet at the point of VHS vs. Betamax or Blu-ray vs. HD DVD, and we may never get there. Since these are process standards, not technical standards, moving in the direction of either BSIMM or OpenSAMM will help an organization advance – and waiting for the dust to settle just means it will take longer to catch up with other organizations.

Or in short: don’t let the perfect be the enemy of the good. For software assurance, it’s time to get moving now.

About the Author

Jeremy Epstein is Senior Computer Scientist at SRI International where he’s involved in various types of computer security research. Over 20+ years in the security business, Jeremy has done research in multilevel systems and voting equipment, led security product development teams, been involved in far too many government certifications, and tried his hand at consulting. He’s published dozens of articles in industry magazines and research conferences. Jeremy earned a B.S. from New Mexico Tech and an M.S. from Purdue University.

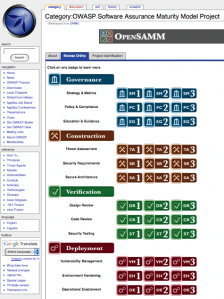

Browse the model online

Posted by Pravir Chandra in Discussion on May 4th, 2009

Over the weekend, we managed to get large parts of the SAMM content imported into the OWASP wiki so that folks can browse the model online. This will also support community contributions for additional material that maps under the SAMM activities. It’ll also help for folks making mappings to existing regulatory standards.

The official SAMM releases going forward will still be made in PDF form for mass distribution. The wiki version will syndicate some of the content for easy online referencing, but the PDF version is still the authoritative source of SAMM information.

Hardcopies available on Lulu.com

Posted by Pravir Chandra in Discussion, Press on April 23rd, 2009

In preparation for the upcoming OWASP conference in Poland, we were asked to help get the 1.0 release up on Lulu.com so that a copy can be printed for each attendee! So, we’ve put up the SAMM 1.0 release and it’s now available for purchase. That means you can purchase professional hardcopies, delivered right to your door, which is pretty handy. Even though I’m partial to the color version, there’s a more economical black & white version available too.

SAMM Mailing List

Posted by Pravir Chandra in Discussion on March 23rd, 2009

We’ve got a project mailing list set up through the OWASP mailing list manager. You can:

- subscribe at https://lists.owasp.org/mailman/listinfo/samm

- read archives at https://lists.owasp.org/pipermail/samm/

- post at samm@lists.owasp.org

Next SAMM release coming this week

Posted by Pravir Chandra in Discussion on March 23rd, 2009

There’s been a huge amount of feedback and lots of refinement to SAMM since the Beta was released last August. I’m happy to report that we’re putting the finishing touches and reviews on the next release as I write. I’ll put together some separate posts that discuss the rationale behind the major changes, but in general, here are some new features in the next release:

- Better introduction – there’s a proper Executive Summary and a section describing the structure of the model before diving into the details

- A section on assessing an existing assurance program – this should help folks that need to map an existing software security program into SAMM (or anyone just performing an assessment of a software security program in general)

- Better guidance on building assurance programs – the Beta had some short text, but the next release includes a bigger section on building a roadmap for a particular organization

- New layout and design – we revamped the ordering of SAMM materials based on feedback from users, and there’s a new topical table of contents (to better route people through the resources provided)

I’m looking forward to feedback on the 1.0 release once it’s out this week… stay tuned!

What’s up with the other model?

Posted by Pravir Chandra in Discussion on March 6th, 2009

A day or two back, Cigital and Fortify released another maturity model named the Building Security In Maturity Model (BSI-MM). I’ve had lots of folks ask me about it and how it’s related to SAMM, so I figured I should write a post about it. The short answer: they’re different (BSIMM forked from the SAMM Beta). The long answer? Keep reading…

So, a long time ago in a galaxy far… ahem… actually, it was last July (2008). Brian Chess and I had a drink at RSA and discussed what I’d be doing with my time now that I’d left Cigital to start independent consulting. I was really focused on using my newfound spare time to build the next revision to CLASP. In my vision (which I talked about as early as the OWASP EU conference in Milan in May of 2007), there would be a model that both demonstrated how to logically improve individual security functions over time and provided a collection of prescriptive roadmaps based on the organization type.

Brian and Fortify gave me a contract to fund development of what would become the SAMM Beta. Once the Beta was complete last August, Gary McGraw (who sits on Fortify’s Technical Advisory Board) got word of SAMM and wanted to get Cigital involved. We had one meeting for Cigital to provide feedback on SAMM, but it was clear to me that they wanted to take the model in a different direction than I had wanted (lots of reasons here, but one objection I had was the use of branding/marketing terminology). So, we forked.

Gary, Brian, and Sammy (and maybe others) massaged the high-level framework from SAMM into what they call their Software Security Framework (SSF). They took this out to 9 big companies with advanced secure development practices to get feedback on what those companies are actually doing. Though I really liked the idea of collecting that data, I wasn’t involved at all. Based on what they learned from SAMM and what they heard from those 9, they created the BSI-MM. So, even though the models may seem similar in structure, they’re different in terms of content.

Just as a disclaimer on the current state of things, I have not worked with the folks at Cigital, but I’m still actively collaborating with folks at Fortify who are supporting both models (and maybe others too!). If folks are interested, I’ll write up more about SAMM vs. BSI-MM once the next release of SAMM comes out next week.

Shiny new website

Posted by Pravir Chandra in Discussion on March 4th, 2009

Well, it was time to trade in the quick and dirty website we stood up for the SAMM Beta. In exchange, we’ve now got a real workhorse, WordPress. Now people can leave comments and discuss proposed changes right here on the site. It’s also a really good platform for building other nifty tools into the site, but more on that later.